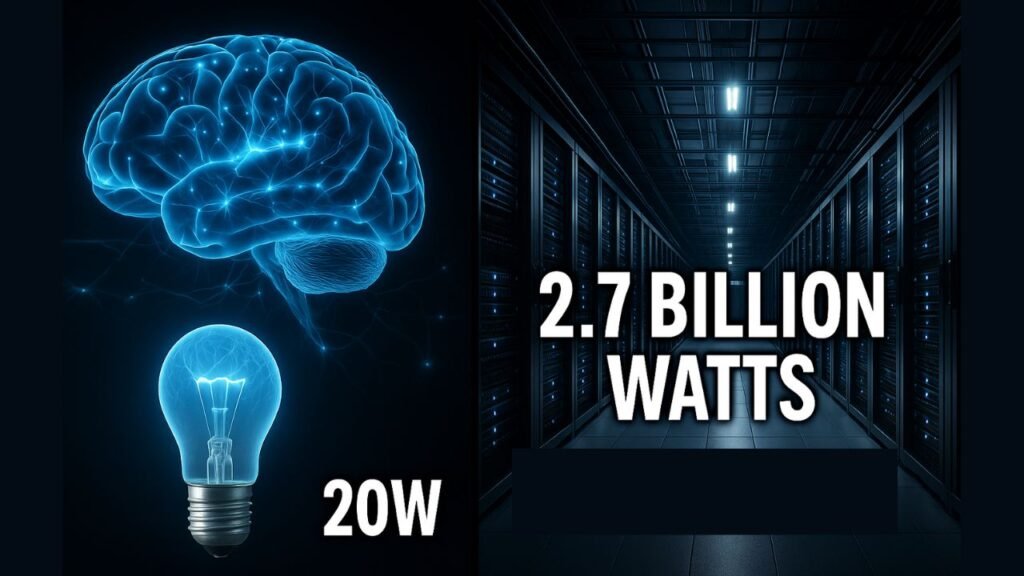

Imagine this: Your brain is processing complex thoughts, solving problems, and creating memories using just 20 watts of power – about the same as a bright LED bulb. Meanwhile, a supercomputer simulating that same brain would need an estimated 2.7 billion watts – enough to power an entire city.

This isn’t science fiction. It’s the shocking reality of our current technological landscape, where silicon needs roughly 135 million times more power than the human brain to do comparable work.

While tech giants race to build more powerful AI systems, they’re creating an unprecedented energy crisis. Google’s carbon emissions have risen 48% since 2019, driven largely by AI-related data center growth. Microsoft’s emissions have jumped 29% since 2020 due to data center expansion. And we’re just getting started.

But here’s what makes this story fascinating: the human brain represents the ultimate efficiency benchmark that could revolutionize how we think about artificial intelligence, sustainable technology, and even personal productivity. The lessons hiding in our heads might just save the planet – and transform how we work, learn, and solve problems.

Let’s dive deep into this energy efficiency battle that’s reshaping our digital future.

The Shocking Energy Comparison That Changes Everything

Your brain runs on less power than your smartphone charger. Let that sink in for a moment.

The human brain operates on approximately 12-20 watts of continuous power, drawing energy from glucose and oxygen delivered through your bloodstream. This biological masterpiece contains 100 billion neurons forming trillions of connections, processing information at an estimated 1 exaFLOPS – that’s 1,000,000,000,000,000,000 operations every single second.

Compare this to artificial intelligence systems:

ChatGPT’s daily operations consume 40 million kilowatt-hours – enough electricity to power 117 countries for an entire day. A single query to ChatGPT uses the equivalent energy of lighting 14 LED bulbs for one hour.

GPT-3’s training alone required an estimated 1,287 megawatt-hours – enough to power about 120 average American homes for a full year. The process emitted an estimated 502 metric tons of CO2 equivalent, roughly the annual emissions of 110 cars.

💡 Tweetable Quote: “Your brain outperforms a data center by 135 million times in energy efficiency. The most advanced computer you’ll ever own is sitting right between your ears.”

The Oak Ridge Frontier supercomputer, the first machine to break the exascale barrier, achieves 1 exaFLOPS using 21 million watts – roughly 1 million times less efficient than your brain for the same computational capacity.

Pro Tip: To put this in perspective, if your brain drew 2.7 billion watts instead of 20, you’d need to eat roughly a million pizzas per hour just to keep thinking.
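
The pizza comparison is just a unit conversion from watts to food calories. Here is a minimal sketch, assuming a whole pizza holds about 2,000 kcal (an illustrative figure, not one from the sources above):

```python
# Convert a continuous power draw into food energy per hour.
JOULES_PER_KCAL = 4184   # 1 food calorie (kcal) = 4,184 joules
KCAL_PER_PIZZA = 2000    # ASSUMPTION: one whole pizza, illustrative only


def kcal_per_hour(watts: float) -> float:
    """Energy used in one hour at a constant power, in kilocalories."""
    joules = watts * 3600  # a watt is one joule per second
    return joules / JOULES_PER_KCAL


def pizzas_per_hour(watts: float) -> float:
    """How many assumed-size pizzas supply that much energy each hour."""
    return kcal_per_hour(watts) / KCAL_PER_PIZZA


brain_kcal = kcal_per_hour(20)        # the brain's 20 watts
sim_pizzas = pizzas_per_hour(2.7e9)   # the 2.7 billion watt figure

print(f"Brain at 20 W: {brain_kcal:.1f} kcal per hour")
print(f"2.7 billion W: {sim_pizzas:,.0f} pizzas per hour")
```

At 20 watts the brain needs well under a slice per hour; at 2.7 billion watts the count lands on the order of a million pizzas.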

The 2.7 Billion Watts Reality Check

The specific statistic of 2.7 billion watts comes from the Blue Brain Project at EPFL, which attempted to simulate just 10 million neurons – a tiny fraction of the human brain. Their supercomputer simulation required 270 kilowatts of continuous power and ran 30,000 times slower than biological brain speed.

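Scaled linearly to the brain’s roughly 100 billion neurons (10,000 times as many), that 270 kilowatts becomes 2.7 billion watts, about 135 million times the brain’s 20 watts. Closing the 30,000-fold speed gap as well pushes the estimate far higher: when researchers calculated the energy requirements to simulate a full human brain at biological speed, they arrived at an energy efficiency 2.7 × 10^13 times worse than the biological original.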
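
The scaling behind these numbers can be sketched in a few lines. This is a back-of-the-envelope model, assuming power grows linearly with neuron count and that running 30,000 times slower means 30,000 times more energy per simulated second; both are simplifying assumptions, not the researchers' published method:

```python
# Figures quoted in the text above.
SIMULATED_NEURONS = 10e6     # neurons in the simulation
SIMULATION_POWER_W = 270e3   # 270 kilowatts
BRAIN_NEURONS = 100e9        # neuron count used in this article
BRAIN_POWER_W = 20
SLOWDOWN = 30_000            # simulation ran this many times slower than biology

# ASSUMPTION: power scales linearly with the number of neurons simulated.
scale = BRAIN_NEURONS / SIMULATED_NEURONS
full_brain_power_w = SIMULATION_POWER_W * scale

# Power gap at the simulation's (slow) speed.
power_ratio = full_brain_power_w / BRAIN_POWER_W

# ASSUMPTION: energy per simulated second grows in proportion to the slowdown.
energy_ratio_real_time = power_ratio * SLOWDOWN

print(f"Full-brain simulation power: {full_brain_power_w:.2e} W")
print(f"Power gap vs. brain: {power_ratio:.2e}x")
print(f"Energy gap per simulated second: {energy_ratio_real_time:.2e}x")
```

This simple model reproduces the 2.7 billion watt and 135 million figures. It does not reproduce the 2.7 × 10^13 figure exactly, which presumably rests on assumptions not stated here.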

This means that simulating your brain’s morning coffee decision would consume enough electricity to power New York City for several minutes.

The Science Behind Brain Efficiency: Nature’s Ultimate Processor

Why is your brain so impossibly efficient? The answer lies in 550 million years of evolutionary optimization, creating the most sophisticated energy management system on Earth.

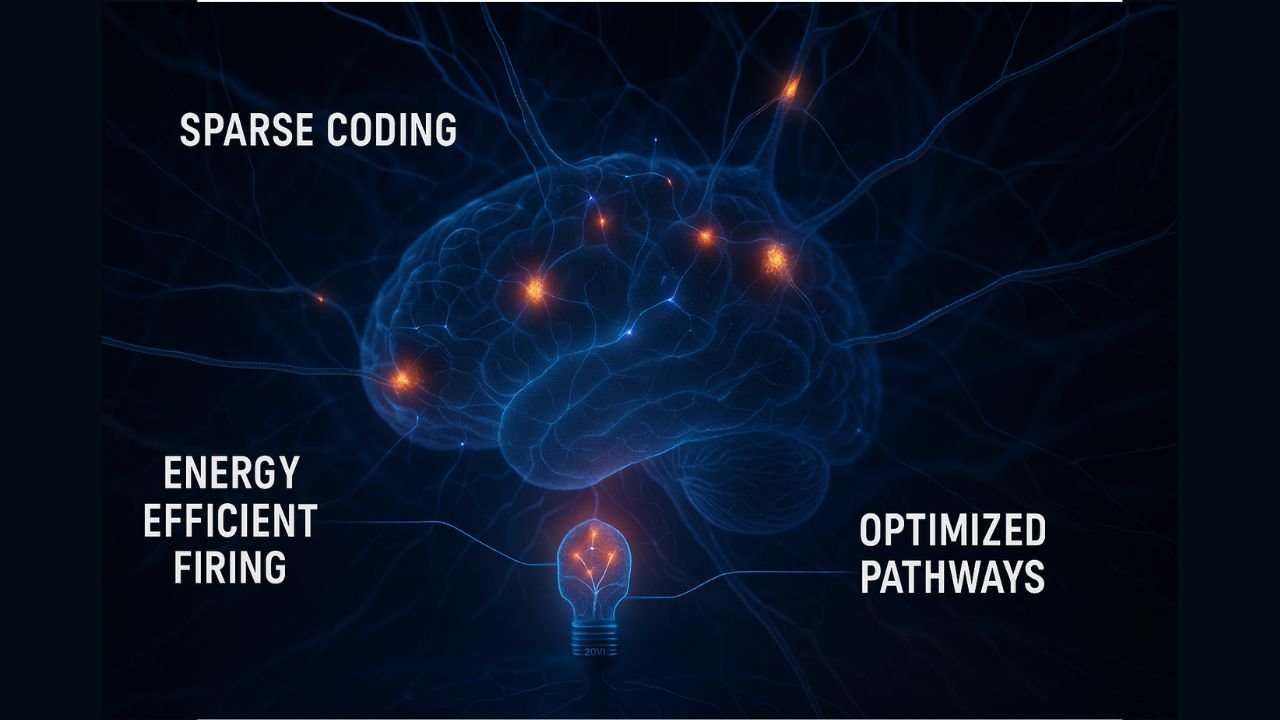

Sparse Neural Networks: Less is More

Unlike AI systems that activate millions of artificial neurons simultaneously, your brain uses sparse coding. Only about 1-2% of neurons fire at any given moment, dramatically reducing energy consumption while maintaining incredible processing power.
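
To see why sparse activity saves work, here is a toy sketch of "k-winners-take-all" sparsification, a technique used in machine learning that is loosely inspired by sparse coding. It is an illustration only, not a model of how real neurons decide when to fire, and the function names are made up for this example:

```python
import random


def sparsify(activations, active_fraction=0.02):
    """Keep only the strongest activations and silence the rest."""
    k = max(1, round(len(activations) * active_fraction))
    # Indices of the k largest activations.
    winners = sorted(
        range(len(activations)), key=lambda i: activations[i], reverse=True
    )[:k]
    sparse = [0.0] * len(activations)
    for i in winners:
        sparse[i] = activations[i]
    return sparse


def sparse_dot(sparse_activations, weights):
    """Dot product that skips silent units and counts the multiplications done."""
    total, multiplications = 0.0, 0
    for a, w in zip(sparse_activations, weights):
        if a != 0.0:
            total += a * w
            multiplications += 1
    return total, multiplications


rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [rng.random() for _ in range(1000)]
weights = [rng.uniform(-1, 1) for _ in range(1000)]

sparse = sparsify(activations)  # 2% of 1,000 units stay active
_, mults = sparse_dot(sparse, weights)
print(f"{mults} of {len(weights)} multiplications performed")
```

With 2% of units active, the downstream computation touches only 20 of the 1,000 weights; a dense layer would multiply all of them.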

Recent research from MIT revealed that synaptic caching mechanisms reduce energy consumption by orders of magnitude during learning. Your brain essentially “remembers” frequently used neural pathways, making them more energy-efficient over time.

Pro Tip: This is why learning new skills becomes easier with practice – your brain literally rewires itself for maximum efficiency.

The Synaptic Energy Conservation System

Your brain employs several energy-saving strategies that put modern processors to shame:

Low-probability neurotransmitter release means that synapses only transmit signals when absolutely necessary. Studies show that synapses have only a 20-50% chance of releasing neurotransmitters when activated, preventing energy waste from unnecessary signaling.

Optimal excitatory/inhibitory balance maintains an 80/20 ratio that maximizes information transmission per energy unit. This balance allows 70% of brain energy to be allocated to spontaneous activity rather than basic maintenance.

Synaptic pruning eliminates energetically expensive connections that aren’t frequently used. During sleep, your brain actually removes up to 20% of synaptic connections that prove inefficient, streamlining neural networks for optimal performance.

💡 Tweetable Quote: “Your brain fires only 1-2% of neurons at once, while AI systems wastefully activate millions. Evolution taught us that smart computing means knowing when NOT to think.”

Integrated Processing and Memory: No Von Neumann Bottleneck

AI systems suffer from the Von Neumann bottleneck – the energy-expensive separation between processing and memory. Data must constantly move between CPU and RAM, consuming significant power.

Your brain eliminates this problem entirely. Synaptic weights serve dual purposes as both memory storage and processing elements. Each synapse simultaneously stores information and performs computations, creating an integrated system that eliminates energy-costly data movement.

Research shows that this integration allows the brain to perform hierarchical processing where local computations reduce the need for long-distance neural communication, further conserving energy.

The metabolic optimization system in your brain includes:

- Astrocyte-neuron lactate shuttles for efficient fuel delivery

- Mitochondrial positioning optimized for local ATP demands

- Activity-dependent energy allocation that prioritizes active brain regions

- Glucose and ketone metabolism providing flexible energy sources

💡 Tweetable Quote: “AI systems move data between memory and processors billions of times per second. Your brain eliminated this energy waste 550 million years ago.”

AI’s Energy Challenge: Why Silicon Struggles

Modern AI systems are essentially brute-force computing machines that achieve intelligence through overwhelming computational power rather than elegant efficiency.

The Training Energy Crisis

Large language model training consumes staggering amounts of energy. Meta’s Llama 3 training generated 2,290 metric tons of CO2 equivalent, comparable to the annual emissions of roughly 500 average cars.

The training process requires processing massive datasets multiple times through energy-intensive algorithms. Gradient computation and backpropagation – the core learning mechanisms in AI – demand far more energy than biological learning processes.

Training inefficiencies include:

- Processing the same data hundreds or thousands of times

- High-precision arithmetic (32-bit floating point vs. brain’s low-precision processing)

- Sequential processing architecture limiting parallelization

- No equivalent to biological “sleep consolidation” for efficient learning
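
The precision point in the list above is the easiest to make concrete. The sketch below shows symmetric 8-bit quantization, one common way of trading a little accuracy for a fourfold cut in storage. The weight values are invented for illustration:

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = max_abs / 127
    return [round(v / scale) for v in values], scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.82, -0.41, 0.07, -1.27, 0.33]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print(f"largest rounding error: {max_error:.4f}")
print(f"storage: {len(weights) * 4} bytes as 32-bit floats, {len(weights)} bytes as 8-bit integers")
```

The rounding error is bounded by half the quantization step, which is why many models tolerate low precision well.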

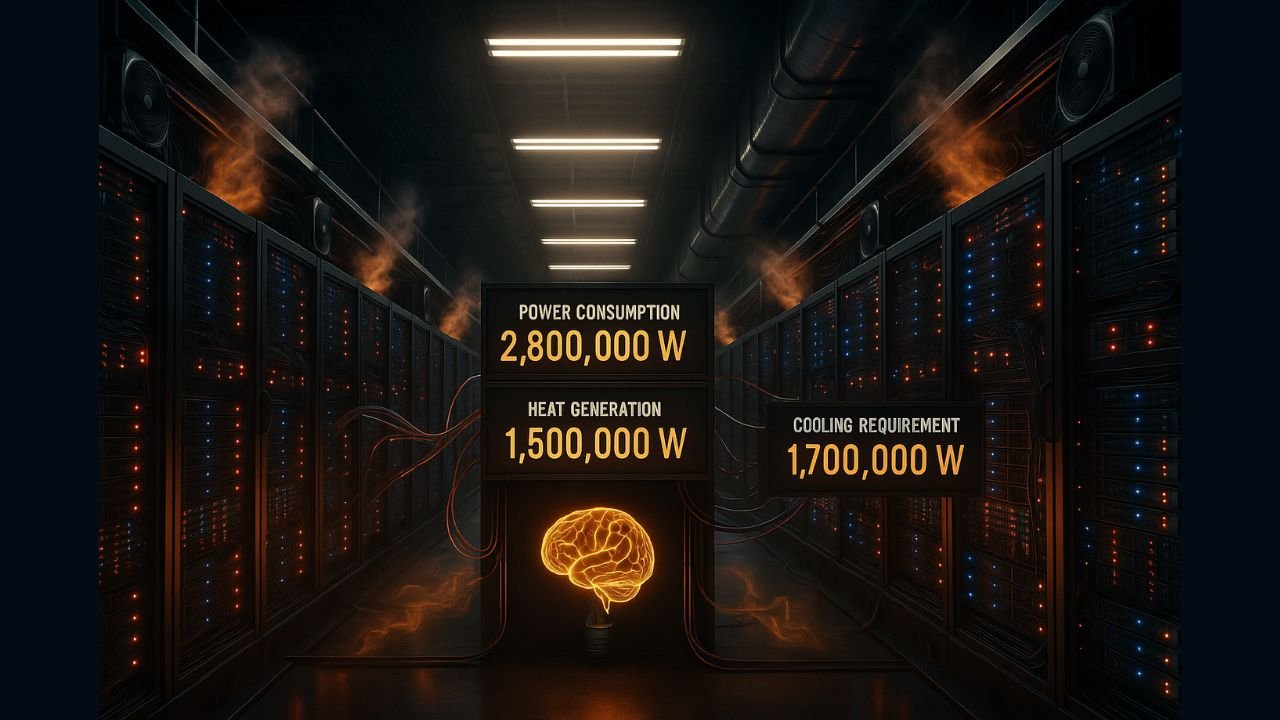

The Inference Energy Problem

Even after training, AI inference (actually using the models) consumes enormous energy. ChatGPT processes over 200 million queries daily, consuming approximately 40 million kilowatt-hours of electricity.
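
Dividing those two figures gives the energy per query they imply. This is plain arithmetic on the numbers quoted above, not an independent measurement; published per-query estimates vary widely:

```python
DAILY_ENERGY_KWH = 40e6   # daily consumption quoted above
DAILY_QUERIES = 200e6     # daily queries quoted above
BRAIN_POWER_W = 20

# Energy per query implied by the two daily figures, in watt-hours.
wh_per_query = DAILY_ENERGY_KWH * 1000 / DAILY_QUERIES

# How long the brain could run on the same energy.
brain_hours = wh_per_query / BRAIN_POWER_W

print(f"Implied energy per query: {wh_per_query:.0f} Wh")
print(f"Equivalent brain running time: {brain_hours:.0f} hours")
```

On these figures, one query costs as much energy as about ten hours of human thinking.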

Each interaction with AI involves:

- Activating millions of artificial neurons simultaneously

- Moving data between memory and processors billions of times

- High-precision calculations for accuracy

- Cooling systems to prevent hardware failure

Pro Tip: The next time you use ChatGPT, remember that your single question uses enough energy to power a refrigerator for 3 hours.

Hardware Limitations: Why Silicon Can’t Match Biology

Current AI hardware faces fundamental constraints:

Precision overhead: AI systems use 16-32 bit floating-point arithmetic for accuracy, while biological neurons operate effectively with much lower precision. Research shows that energy per operation rises steeply with precision requirements.

Memory wall effects: Modern processors spend up to 70% of their energy just moving data between memory and processing units. The brain’s integrated memory-processing architecture eliminates this waste entirely.

Cooling requirements: Data centers consume 40% of their total energy just on cooling systems to prevent overheating. Your brain maintains optimal temperature through efficient blood circulation.
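
A toy energy budget shows how data movement can come to dominate. The per-operation energies below are assumed, order-of-magnitude values chosen for illustration; real figures depend on the chip process and memory technology:

```python
# ASSUMED energies in picojoules; illustrative orders of magnitude only.
ENERGY_PJ = {
    "multiply_add_32bit": 4.6,   # one 32-bit floating-point multiply-add
    "offchip_read_32bit": 640.0, # fetching 32 bits from external memory
    "onchip_read_32bit": 5.0,    # fetching 32 bits from a small on-chip buffer
}


def data_movement_share(multiply_adds, offchip_reads, onchip_reads):
    """Fraction of total energy spent moving data rather than computing."""
    compute = multiply_adds * ENERGY_PJ["multiply_add_32bit"]
    movement = (
        offchip_reads * ENERGY_PJ["offchip_read_32bit"]
        + onchip_reads * ENERGY_PJ["onchip_read_32bit"]
    )
    return movement / (compute + movement)


# Same amount of arithmetic, two hypothetical memory layouts.
far_memory = data_movement_share(1_000_000, offchip_reads=100_000, onchip_reads=2_000_000)
near_memory = data_movement_share(1_000_000, offchip_reads=0, onchip_reads=1_000_000)

print(f"Frequent off-chip traffic: {far_memory:.0%} of energy is data movement")
print(f"Data kept next to compute: {near_memory:.0%} of energy is data movement")
```

Under these assumptions, keeping data close to the arithmetic cuts the movement share substantially, which is the idea behind memory-integrated designs.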

💡 Tweetable Quote: “AI systems use sledgehammers where brains use scalpels. Precision has an energy cost, and biology found the sweet spot hundreds of millions of years before we invented computers.”

Practical Brain Optimization Techniques That Actually Work

Your brain’s efficiency isn’t fixed – you can optimize it using evidence-based techniques that boost cognitive performance while reducing mental energy waste.

The Single-Tasking Revolution

Multitasking can reduce productivity by up to 40% due to task-switching costs, according to research from Stanford University. Each time you switch between tasks, your brain must reorient attention, reload context, and rebuild focus – an energy-expensive process.

The solution: Single-tasking with strategic focus blocks.

Implement the 25-50 minute focus rule:

- Work on one task for 25-50 minutes

- Take 10-15 minute breaks between sessions

- Close unnecessary browser tabs and applications

- Turn off all notifications during focus blocks

Pro Tip: It takes an average of 23 minutes and 15 seconds to regain full focus after an interruption. Protecting your attention is protecting your brain’s energy.
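
If you like to plan in code, the focus rule and the interruption cost are easy to sketch. The function names and the default block lengths below are choices made for this example, picked from within the ranges above:

```python
from datetime import timedelta

REFOCUS_TIME = timedelta(minutes=23, seconds=15)  # average time to regain focus


def plan_focus_blocks(total_minutes, focus_minutes=50, break_minutes=10):
    """Return ("focus" | "break", minutes) tuples that fit within total_minutes."""
    blocks, remaining = [], total_minutes
    while remaining >= focus_minutes:
        blocks.append(("focus", focus_minutes))
        remaining -= focus_minutes
        # Only take a break if another full focus block still fits afterwards.
        if remaining >= break_minutes + focus_minutes:
            blocks.append(("break", break_minutes))
            remaining -= break_minutes
        else:
            break
    return blocks


def refocus_cost(interruptions):
    """Total time spent regaining focus after the given number of interruptions."""
    return REFOCUS_TIME * interruptions


blocks = plan_focus_blocks(180)  # a three-hour work session
print(blocks)
print("Cost of 4 interruptions:", refocus_cost(4))
```

Four interruptions add up to more than an hour and a half of lost focus, nearly two full focus blocks.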

Research from the University of California shows that single-tasking improves accuracy, reduces errors, and enhances creativity while consuming significantly less cognitive energy than multitasking.

Nutrition for Cognitive Efficiency

Your brain consumes 20% of your body’s total energy, making nutritional optimization crucial for peak performance.

Evidence-based nutrition strategies:

Protein intake matters: Studies show that adequate protein intake (0.8-1.2g per kg body weight daily) positively impacts executive function and working memory, especially under high cognitive demands.

Omega-3 fatty acids are critical for brain energy metabolism. Research shows that EPA and DHA supplementation (1-2g daily) improves cognitive efficiency and reduces mental fatigue.

Mediterranean diet patterns are linked to reduced cognitive decline risk and improved energy metabolism. Key components include:

- Omega-3 rich fish (2-3 times weekly)

- Antioxidant-rich berries and leafy greens

- Nuts and seeds for sustained energy

- Limited processed foods that cause energy spikes and crashes

Pro Tip: Your brain runs almost exclusively on glucose under normal conditions, but ketones can provide up to 60% of brain energy during prolonged fasting or ketosis. Intermittent fasting or low-carb periods may enhance cognitive efficiency.

Exercise: The Brain’s Energy Upgrade

Aerobic exercise acts like a software update for your brain’s efficiency. Research shows that 120-150 minutes of moderate exercise weekly produces measurable improvements in cognitive performance.

How exercise optimizes brain energy:

- Increases brain-derived neurotrophic factor (BDNF) by up to 300%

- Enhances prefrontal cortex and medial temporal cortex volume

- Improves mitochondrial efficiency in brain cells

- Increases slow-wave sleep quality for better cognitive recovery

The minimum effective dose: 30 minutes of moderate aerobic exercise, 5 days weekly. This can be as simple as brisk walking, cycling, or swimming.

Timing optimization: Finishing exercise at least 1-2 hours before sleep supports recovery without interfering with rest. Morning exercise enhances cognitive performance throughout the day.

Sleep: The Ultimate Brain Efficiency Hack

Seven hours of sleep is associated with optimal cognitive performance, according to large-scale studies analyzing over 500,000 participants. Both too little (less than 6 hours) and too much (more than 8 hours) sleep correlate with cognitive decline.

Sleep’s energy optimization functions:

- Synaptic pruning removes inefficient neural connections

- Glymphatic system activation clears metabolic waste

- Memory consolidation transfers information to energy-efficient storage

- Neurotransmitter regulation rebalances brain chemistry

Pro Tip: Sleep consistency matters more than duration. Maintaining regular sleep and wake times within 30 minutes daily optimizes your brain’s energy management systems.

Sleep environment optimization:

- Temperature: 65-68°F (18-20°C) for optimal brain cooling

- Darkness: Complete darkness or blackout curtains

- Quiet: Use white noise or earplugs if necessary

- Comfortable mattress and pillows supporting proper alignment

💡 Tweetable Quote: “Your brain spends 1/3 of its time in maintenance mode during sleep. Skip sleep optimization, and you’re running on a partially charged battery all day.”

Stress Management for Cognitive Efficiency

Chronic stress dramatically reduces brain efficiency through sustained cortisol elevation, which impairs memory formation, reduces attention span, and increases cognitive energy consumption.

Evidence-based stress reduction techniques:

Meditation and mindfulness: Just 10-20 minutes daily of meditation improves attention regulation and reduces the brain’s default mode network activity – saving cognitive energy throughout the day.

Deep breathing exercises: 4-7-8 breathing patterns (4 seconds in, 7 seconds hold, 8 seconds out) activate the parasympathetic nervous system, reducing cognitive load and improving focus.

Nature exposure: Studies show that 20 minutes in natural environments reduces cortisol levels and improves cognitive performance for up to 2 hours afterward.

AI Efficiency Innovations and the Path Forward

The future of AI isn’t about building bigger, more power-hungry systems – it’s about learning from the brain’s efficiency secrets and implementing them in silicon.

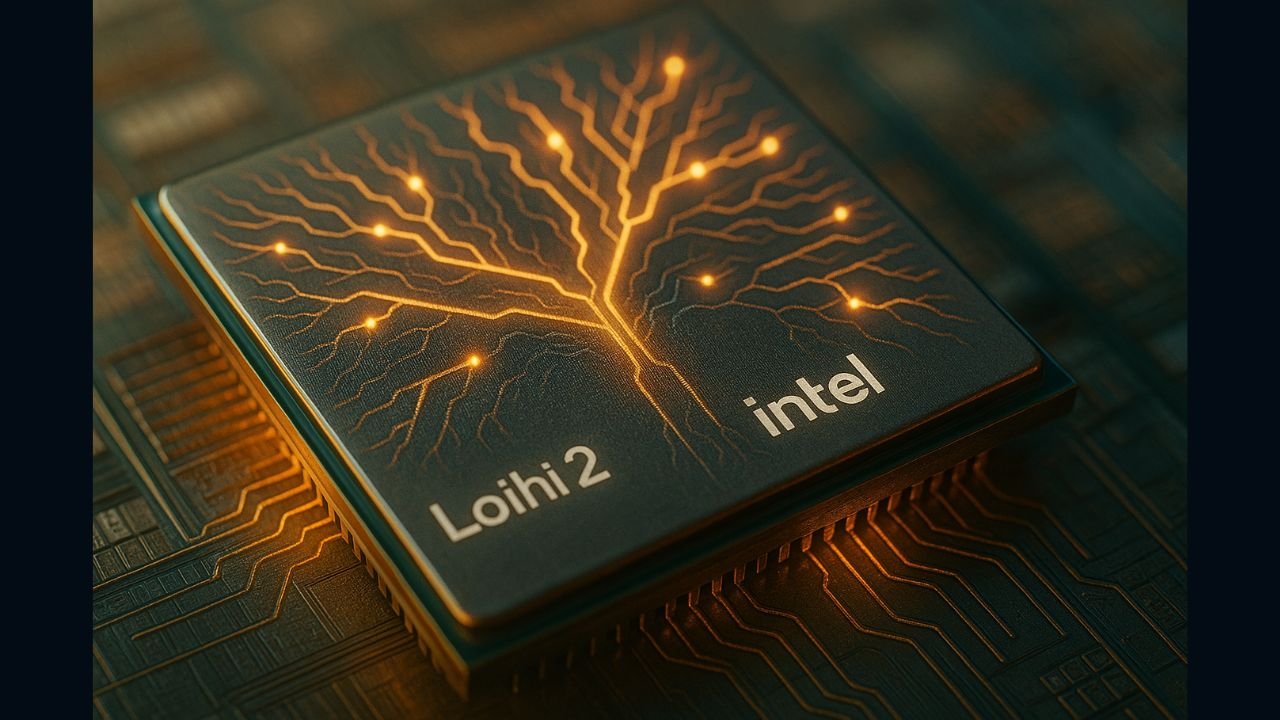

Neuromorphic Computing: Silicon That Thinks Like Biology

Neuromorphic computing represents the most promising approach to bridging the efficiency gap between brains and AI systems. These brain-inspired chips could achieve 1000x energy efficiency improvements over current AI hardware.

Leading innovations:

Intel’s Loihi 2 chip supports up to 1 million neurons and 120 million synapses while consuming just 1-10 watts of power. Intel’s Hala Point system, featuring 1,152 Loihi 2 processors, delivers 1.15 billion neurons – about 1% of the human brain’s neuron count.
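
The Hala Point figure follows directly from the per-chip number. A quick check, using the 100 billion neuron count this article uses for the brain:

```python
LOIHI2_NEURONS_PER_CHIP = 1_000_000  # per-chip capacity quoted above
HALA_POINT_CHIPS = 1_152
BRAIN_NEURONS = 100e9                # neuron count used in this article

hala_point_neurons = LOIHI2_NEURONS_PER_CHIP * HALA_POINT_CHIPS
share_of_brain = hala_point_neurons / BRAIN_NEURONS

print(f"Hala Point neurons: {hala_point_neurons:,}")
print(f"Share of a human brain: {share_of_brain:.2%}")
```

Neuron count is only one axis of comparison; synapse count and connectivity matter just as much.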

IBM’s NorthPole processor runs 22x faster than GPUs while using 25x less energy for AI inference tasks. The chip integrates memory and processing like biological neurons, eliminating the Von Neumann bottleneck.

The neuromorphic computing market is reportedly growing at 108% annually, with applications spanning robotics, healthcare, and edge devices.

Pro Tip: Companies like BrainChip already offer neuromorphic processors that run on milliwatts of power – enough for years of battery life in edge devices.

Photonic Computing: Light-Speed AI Processing

Photonic processors use light instead of electrons for AI computations, offering dramatic efficiency improvements. MIT researchers developed a fully integrated photonic processor that completes neural network computations in less than 0.5 nanoseconds with 92% accuracy.

Key advantages:

- 10-fold reduction in both footprint and energy consumption

- Natural parallelism enables simultaneous matrix operations

- Optical matrix multiplication at 100 GHz rates

- Far less resistive loss and heat than electronic circuits

Lightmatter’s Envise processor represents the world’s first general-purpose photonic AI accelerator, handling 6.4 Terabits per second of optical interconnections in a standard server format.

Edge Computing: Bringing AI Closer to Data

Edge AI processing reduces energy consumption by eliminating the need to transmit data to distant data centers. The edge AI market is projected to reach $221.51 billion by 2032, growing at 33.93% annually.

Efficiency gains from edge processing:

- Local processing eliminates data transmission energy costs

- Specialized chips like Hailo-8 deliver 26 TOPS at 2.5W power consumption

- Real-time processing without cloud latency or bandwidth requirements
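
Chip efficiency is usually compared in TOPS per watt (trillions of operations per second, per watt). The sketch below works it out for the Hailo-8 figures in the list above and, for scale, for the brain figures quoted earlier in this article. The brain's "operations" and a chip's integer operations are not the same thing, so treat the comparison as a rough analogy:

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Trillions of operations per second delivered per watt."""
    return tops / watts


hailo8 = tops_per_watt(26, 2.5)  # 26 TOPS at 2.5 W

# The article's brain estimate: 1e18 operations per second on 20 watts.
brain_tops = 1e18 / 1e12         # convert operations per second to TOPS
brain = tops_per_watt(brain_tops, 20)

print(f"Hailo-8: {hailo8:.1f} TOPS/W")
print(f"Brain (article's estimate): {brain:,.0f} TOPS/W")
```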

By 2025, 55% of AI data analysis will occur on edge devices rather than in energy-intensive data centers.

Quantum Computing: The Ultimate Efficiency Frontier

Quantum computers could reduce AI data center energy consumption by 12.5% according to Cornell University research. While still in early stages, quantum systems solve certain optimization problems exponentially faster than classical computers.

Quantum advantages for AI:

- Quantum parallelism processes multiple possibilities simultaneously

- Optimization algorithms excel at AI training and inference

- Energy consumption in kilowatts vs. megawatts for supercomputers

Timeline expectations: Quantum-classical hybrid systems for specific AI tasks are expected by 2025-2027, with broader applications following by 2030.

💡 Tweetable Quote: “The future of AI isn’t bigger data centers – it’s chips that think like brains, process with light, and compute with quantum mechanics.”

Environmental and Economic Implications: The Sustainability Crisis

AI’s energy consumption is creating an unprecedented sustainability challenge that threatens global climate goals while imposing massive economic costs on consumers and businesses.

The Carbon Footprint Reality Check

Global data centers consumed 415 terawatt-hours in 2024 – equivalent to 1.5% of global electricity consumption. AI-specific servers in the US alone consume 53-76 terawatt-hours annually – enough to power 7.2 million homes.
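
These figures can be sanity-checked against each other. The snippet below derives the global total and the per-home consumption that the quoted numbers imply; it uses only the values in the paragraph above:

```python
DATA_CENTER_TWH = 415        # global data center consumption, 2024
DATA_CENTER_SHARE = 0.015    # 1.5% of global electricity
AI_SERVER_TWH_HIGH = 76      # upper end of the US AI server range
HOMES_POWERED = 7.2e6

# Global electricity consumption implied by the share.
implied_global_twh = DATA_CENTER_TWH / DATA_CENTER_SHARE

# Annual consumption per home implied by the comparison (1 TWh = 1,000,000 MWh).
mwh_per_home = AI_SERVER_TWH_HIGH * 1e6 / HOMES_POWERED

print(f"Implied global consumption: {implied_global_twh:,.0f} TWh")
print(f"Implied use per home: {mwh_per_home:.1f} MWh per year")
```

The homes comparison matches the upper end of the 53-76 terawatt-hour range and works out to a little over 10 megawatt-hours per household per year.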

Carbon emissions are accelerating rapidly:

- Google’s emissions increased 48% since 2019 due to AI expansion

- Microsoft’s emissions jumped 29% from data center growth

- Data center CO2 emissions may double between 2022 and 2030

Water consumption adds another layer to the environmental impact. Google consumed 5 billion gallons of freshwater in 2022 for data center cooling, while Microsoft’s water usage increased 34% in a single year.

Pro Tip: Every 10-50 ChatGPT queries consume the equivalent of one bottle of water for cooling requirements.

Economic Costs: Who Pays for AI’s Energy Appetite?

The infrastructure requirements for AI are staggering:

- Microsoft and OpenAI’s Stargate project: $100 billion for AI infrastructure

- US utilities: $50 billion investment in new generation capacity just for data centers

- Goldman Sachs estimate: $720 billion in grid spending through 2030

These costs are being passed to consumers:

- New Jersey residents: Up to 20% electricity bill increases

- Virginia ratepayers: Additional $37.50 monthly due to data center demand

- Data center electricity demand: Expected to rise from 4.4% to as much as 12% of US consumption by 2028

The Renewable Energy Race

Tech companies are scrambling to meet sustainability commitments:

Google has matched 100% of its electricity use with renewable energy purchases since 2017 but stopped claiming operational carbon neutrality in July 2024 as AI expansion pushed its emissions up.

Microsoft committed to carbon negative operations by 2030 but has seen emissions increase 29% since 2020 due to data center expansion.

The problem: AI energy growth is outpacing renewable energy deployment. While tech companies contract massive renewable energy portfolios, the sheer scale of AI expansion makes net-zero goals increasingly difficult.

💡 Tweetable Quote: “AI systems are getting smarter, but they’re making the planet dumber about energy use. We need efficiency innovation, not just bigger data centers.”

Practical Applications: When to Use Your Brain vs AI

The most effective approach isn’t choosing between human intelligence and AI – it’s strategically combining both for maximum efficiency and minimum energy waste.

The Decision Matrix: Human vs AI vs Hybrid

Use your optimized brain when:

- High-stakes decisions requiring ethical judgment

- Creative direction and emotional intelligence

- Novel situations without historical precedent

- Relationship building and stakeholder management

- Complex interpretation of ambiguous information

Use AI when:

- Large dataset analysis and pattern recognition

- Routine, repetitive tasks with clear parameters

- 24/7 availability requirements

- Predictive analytics and risk assessment

- Initial content generation and variations

Use hybrid human-AI systems when:

- Complex decisions benefiting from both data and intuition

- Creative projects requiring both generation and direction

- Learning and improvement opportunities

- Regulatory compliance requiring human oversight
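
The matrix above can be turned into a simple routing rule. The sketch below is one possible encoding, with hypothetical task attributes and a deliberately small rule set; a real team would tune both to its own work:

```python
from dataclasses import dataclass
from enum import Enum


class Handler(Enum):
    HUMAN = "human"
    AI = "ai"
    HYBRID = "hybrid"


@dataclass
class Task:
    # All attributes are hypothetical flags chosen for this illustration.
    name: str
    high_stakes: bool = False      # ethical judgment or major consequences
    novel: bool = False            # no historical precedent
    repetitive: bool = False       # routine work with clear parameters
    data_heavy: bool = False       # large datasets or pattern recognition
    needs_oversight: bool = False  # e.g. regulatory compliance


def route(task: Task) -> Handler:
    """Pick who should handle a task, following the decision matrix."""
    human_signals = task.high_stakes or task.novel
    ai_signals = task.repetitive or task.data_heavy
    if task.needs_oversight or (human_signals and ai_signals):
        return Handler.HYBRID
    if human_signals:
        return Handler.HUMAN
    if ai_signals:
        return Handler.AI
    return Handler.HUMAN  # default to human judgment when nothing matches


for task in (
    Task("merger decision", high_stakes=True),
    Task("log triage", repetitive=True, data_heavy=True),
    Task("compliance report", data_heavy=True, needs_oversight=True),
):
    print(f"{task.name}: {route(task).value}")
```

Defaulting to the human when no rule matches is a design choice: it keeps ambiguous work with the party best equipped to interpret it.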

Business Implementation Framework

Start with high-impact, low-complexity applications:

Customer Service: AI handles initial queries and data gathering, humans manage complex issues and relationship building. Companies report 30% reduction in response times with this approach.

Content Creation: AI generates initial drafts and variations, humans provide creative direction and final approval. This hybrid approach can increase productivity by 40% while maintaining quality.

Data Analysis: AI processes large datasets and identifies patterns, humans interpret results and make strategic decisions. Organizations see 60% faster insights with maintained accuracy.

Pro Tip: Pilot projects averaging $70,000 show better ROI than large-scale implementations exceeding $750,000. Start small, measure results, then scale successful approaches.

Personal Productivity Optimization

The Cognitive Load Management System:

Morning (Peak Brain Energy): Deep work on most important tasks before checking communications. Your brain has maximum efficiency for 2-4 hours after waking.

Midday (Moderate Brain Energy): Use AI assistants for research, scheduling, and routine communications. Let AI handle information processing while you focus on decision-making.

Afternoon (Lower Brain Energy): Collaborative work and meetings when cognitive energy naturally dips. Social interaction requires less intensive brain processing.

Evening (Recovery Mode): Planning and reflection using AI tools for organization while avoiding stimulating technology 1-2 hours before sleep.

The 80/20 Rule for Human-AI Collaboration: Use your brain for the 20% of tasks that require creativity, judgment, and relationship skills. Let AI handle the 80% of routine tasks that consume cognitive energy without adding strategic value.

💡 Tweetable Quote: “Your brain is Ferrari-level hardware. Don’t waste it on Honda Civic tasks. Use AI for the mundane, save your cognitive horsepower for what matters.”

Frequently Asked Questions: Brain vs AI Energy

Q1: How much energy does my brain actually use compared to my phone?

Your brain uses about 20 watts continuously – roughly the same as your phone charger when plugged in. However, your phone’s processor only uses 2-3 watts during intensive tasks, making your brain about 7-10 times more power-hungry than your smartphone. The difference? Your brain is processing vastly more complex information simultaneously.

Q2: Why can’t we just build computers that work like brains?

We’re trying, but biological systems are incredibly complex. Current neuromorphic chips like Intel’s Loihi can simulate about 1 million neurons, while your brain has 100 billion neurons. The challenge isn’t just scale – it’s replicating the precise energy management, learning mechanisms, and integration that took evolution 550 million years to perfect.

Q3: Will AI energy consumption bankrupt the planet?

Not bankrupt, but it could strain energy grids significantly. Data centers may consume 12% of US electricity by 2028, up from 4.4% today. However, efficiency innovations like neuromorphic computing could reduce AI energy consumption by 1000x, making sustainable AI development possible.

Q4: Can I actually make my brain more energy-efficient?

Absolutely. Research shows that single-tasking, optimal nutrition, regular exercise, and quality sleep can improve cognitive efficiency measurably. The brain’s energy consumption doesn’t change much, but you can dramatically improve the output per watt through lifestyle optimization.

Q5: What’s the biggest energy waste in current AI systems?

The Von Neumann bottleneck – constantly moving data between memory and processors. Up to 70% of AI system energy goes to data movement rather than actual computation. Biological brains integrate memory and processing, eliminating this massive inefficiency.

Q6: How long until AI matches brain efficiency?

Conservative estimates suggest 2040-2050 for approaching brain-level efficiency in specific tasks. Neuromorphic computing could achieve 100-1000x improvements within the next decade, getting us within 2-3 orders of magnitude of biological efficiency by 2030.
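
How close a 100-1000x improvement gets us depends on which gap you start from. A quick sketch in orders of magnitude, using the two gaps quoted earlier in this article (the brain-simulation estimate and the Frontier supercomputer):

```python
import math

BRAIN_POWER_W = 20
SIMULATION_GAP = 2.7e9 / BRAIN_POWER_W  # brain-simulation estimate vs. the brain
FRONTIER_GAP = 21e6 / BRAIN_POWER_W     # Frontier supercomputer vs. the brain


def remaining_orders(gap: float, improvement: float) -> float:
    """Orders of magnitude still separating silicon from the brain."""
    return math.log10(gap / improvement)


for label, gap in (("brain simulation", SIMULATION_GAP), ("Frontier", FRONTIER_GAP)):
    for improvement in (100, 1000):
        left = remaining_orders(gap, improvement)
        print(f"{label}, {improvement}x better: {left:.1f} orders of magnitude to go")
```

Measured from the Frontier baseline, a 1000x gain leaves about three orders of magnitude; measured from the brain-simulation estimate, the remaining gap is larger.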

Q7: Should I avoid using AI because of its environmental impact?

Strategic use is more important than avoidance. Use AI for tasks that truly benefit from its capabilities – data analysis, pattern recognition, routine automation – while using your optimized brain for creative and strategic work. The environmental impact per query is significant, but the productivity gains can justify thoughtful usage.

Q8: Why don’t tech companies just build more efficient AI systems?

They are, but it’s extremely challenging. Current AI systems are based on 1940s computer architecture that separates memory and processing. Rebuilding from biological principles requires entirely new hardware, software, and manufacturing processes. Companies are investing billions in neuromorphic and photonic computing research.

Q9: Can quantum computers solve AI’s energy problem?

Potentially, for specific applications. Quantum computers excel at optimization problems that are central to AI training and inference. Early research suggests 12.5% energy reduction in data centers, with greater improvements possible as quantum technology matures. However, quantum systems are still years away from practical AI applications.

Q10: What can individuals do to push for more efficient AI?

Demand transparency and efficiency from AI service providers. Support companies investing in neuromorphic and sustainable computing research. Optimize your own cognitive performance to reduce unnecessary AI dependency. Choose AI tools that publish energy consumption data and prioritize efficiency alongside performance.

The Future is Efficient: Your Next Steps

The brain vs AI energy battle isn’t just about technology – it’s about the future of human productivity, environmental sustainability, and the next evolution of intelligence itself.

The remarkable truth: Your brain remains the most sophisticated computer ever created, operating with an efficiency that makes supercomputers look like energy-wasting dinosaurs. While AI systems require city-sized power plants, your thoughts flow on the power of a light bulb.

But this isn’t a competition where one side must win. The future belongs to those who master the integration – combining optimized human intelligence with strategically deployed artificial intelligence for maximum impact and minimum energy waste.

Your immediate action plan:

- Optimize your biological hardware using the evidence-based techniques in this guide

- Use AI strategically for data processing and routine tasks while preserving cognitive energy for high-value work

- Support companies investing in energy-efficient AI technologies

- Stay informed about neuromorphic computing and sustainable AI developments

The efficiency revolution is coming. Brain-inspired computers that use light instead of electrons, quantum systems that exploit superposition to explore many candidate solutions at once, and neuromorphic chips that learn like biological neurons are moving from research labs to real-world applications.

Companies that master this integration first – combining human cognitive optimization with efficient AI deployment – will gain competitive advantages measured in years, not months.

The choice is yours: Continue the energy-wasteful approach of current AI systems, or embrace the brain’s efficiency secrets to create a sustainable, productive future.

Ready to optimize both your biological and artificial intelligence? The tools and techniques are available now. The only question is whether you’ll use them before your competitors do.

💡 Final Tweetable Quote: “The future isn’t human vs AI – it’s optimized humans working with efficient AI. Master both, and you’ll have superpowers that consume less energy than a light bulb.”

The brain vs AI energy battle has a clear winner: the combination of both, optimized for maximum efficiency and minimum waste. Your superintelligent future runs on 20 watts.